Blox-Net

Generative Design-for-Robot-Assembly using VLM Supervision, Physics Simulation, and a Robot with Reset

¹UC Berkeley, ²Cornell

2025 IEEE International Conference on Robotics and Automation (ICRA), Atlanta, GA

Presenting Thursday at 3:25 pm in the Manufacturing and Assembly Processes Session (407)

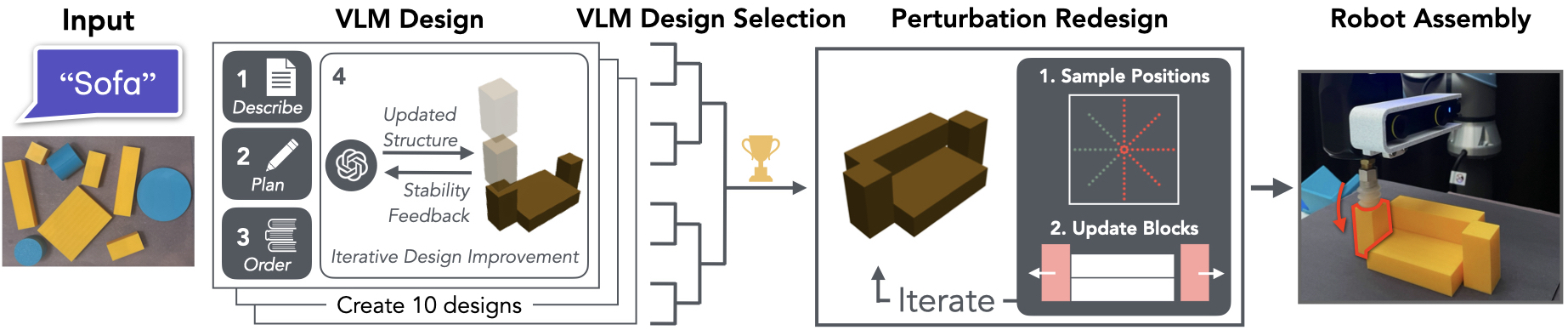

TL;DR: Blox-Net iteratively prompts a VLM, with a physics simulator in the loop, to generate designs from a text prompt using a set of blocks, then assembles them with a real robot!


Overview

Generative Design-for-Robot-Assembly (GDfRA) is the task of generating and physically constructing a design from a natural language prompt (e.g., “giraffe”) and an image of the available physical components. Blox-Net is a GDfRA system that uses a Vision Language Model (VLM), a physics simulator, and a real robot to generate designs resembling a text prompt and reliably assemble them from 3D-printed blocks with no human input. Blox-Net achieves a 99.2% block placement success rate!

Blox-Net Design Generation

Blox-Net generates designs by sequentially prompting GPT-4o to develop a design plan and then to place blocks in a simulator. GPT-4o then receives visual and stability feedback from the simulator and adjusts the design. This process runs 10 times in parallel, and GPT-4o selects the best-looking design. Finally, an automated perturbation-based redesign step introduces tolerances and improves stability, making the design easier for the robot to construct.
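The generate, simulate, revise, select, and redesign loop described above can be sketched in Python. This is a toy illustration under loud assumptions, not Blox-Net's implementation: GPT-4o and the physics simulator are replaced by hypothetical stubs (`propose_design`, `revise_design`, `score_design`, `is_stable`), designs are simplified to 2D towers of blocks, and `add_tolerance` is our own simple stand-in for the perturbation redesign. It checks stability under ±`tol` placement errors and halves each block's overhang until the tower passes.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class Block:
    """Hypothetical 2D block: center x, bottom z, width w, height h (cm)."""
    x: float
    z: float
    w: float
    h: float


def is_stable(tower):
    """Crude stand-in for the physics simulator (towers only): each block's
    center must lie over the footprint of the block directly beneath it."""
    return all(abs(top.x - below.x) <= below.w / 2
               for below, top in zip(tower, tower[1:]))


def propose_design(prompt, rng):
    """Hypothetical stand-in for prompting GPT-4o: a random 2-4 block tower.
    This toy stub ignores the prompt."""
    tower, x, z = [], 0.0, 0.0
    for _ in range(rng.randint(2, 4)):
        tower.append(Block(x=x, z=z, w=rng.choice([2.0, 4.0]), h=2.0))
        x += rng.uniform(-2.0, 2.0)
        z += 2.0
    return tower


def rebuild(tower, offset_fn):
    """Re-stack the tower, mapping each block's original offset from the
    block below through offset_fn(offset, block_below)."""
    new = [tower[0]]
    for below, top in zip(tower, tower[1:]):
        new.append(replace(top, x=new[-1].x + offset_fn(top.x - below.x, below)))
    return new


def revise_design(tower):
    """Hypothetical stand-in for GPT-4o revising after stability feedback:
    re-center any block that overhangs its support."""
    return rebuild(tower, lambda off, below: off if abs(off) <= below.w / 2 else 0.0)


def score_design(tower):
    """Hypothetical stand-in for GPT-4o picking the best-looking design:
    prefer taller towers, then smaller total overhang."""
    return (len(tower), -sum(abs(t.x - b.x) for b, t in zip(tower, tower[1:])))


def generate_candidate(prompt, seed, max_rounds=3):
    """Propose a design, then revise it with simulator feedback until stable."""
    rng = random.Random(seed)
    tower = propose_design(prompt, rng)
    for _ in range(max_rounds):
        if is_stable(tower):
            break
        tower = revise_design(tower)
    return tower


def survives_perturbation(tower, tol):
    """True if the tower stays stable when every block is shifted by +/- tol
    (all sign combinations are checked)."""
    return all(
        is_stable([replace(b, x=b.x + s * tol) for b, s in zip(tower, signs)])
        for signs in product((-1, 1), repeat=len(tower)))


def add_tolerance(tower, tol, max_iters=20):
    """Toy redesign step: halve each block's overhang until the tower is
    stable under all +/- tol placement errors."""
    for _ in range(max_iters):
        if survives_perturbation(tower, tol):
            break
        tower = rebuild(tower, lambda off, below: off / 2)
    return tower


def design_pipeline(prompt, n_candidates=10, tol=0.25):
    # Generate candidates in parallel (the page describes 10 parallel runs).
    with ThreadPoolExecutor() as pool:
        candidates = list(pool.map(lambda s: generate_candidate(prompt, s),
                                   range(n_candidates)))
    stable = [t for t in candidates if is_stable(t)]
    best = max(stable, key=score_design)
    return add_tolerance(best, tol)
```

Calling `design_pipeline("giraffe")` returns a stable tower that also tolerates ±0.25 cm placement error. Each candidate uses its own seeded generator, so results are reproducible regardless of thread scheduling. In a real system each stub would be a GPT-4o call or a simulator rollout; a thread pool fits that kind of I/O-bound work.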


Arts in Robotics Program ICRA 2025

A closely related work analyzing Blox-Net's recreations of well-known artworks will be presented at the ICRA '25 Art in Robotics Program. It considers the nature of creativity, authorship, and artistic expression in an age increasingly shaped by AI and robotics. Learn more: https://bloxnet.org/art

Citation

If you use this work or find it helpful, please consider citing:

@inproceedings{goldberg2025bloxnet,
  title={Blox-Net: Generative Design-for-Robot-Assembly using VLM Supervision, Physics Simulation, and a Robot with Reset},
  author={Goldberg, Andrew and Kondap, Kavish and Qiu, Tianshuang and Ma, Zehan and Fu, Letian and Kerr, Justin and Huang, Huang and Chen, Kaiyuan and Fang, Kuan and Goldberg, Ken},
  booktitle={2025 International Conference on Robotics and Automation (ICRA)},
  year={2025},
  organization={IEEE}
}